Privacy and Robustness in Federated Learning: Attacks and Defenses

A summary of the survey "Privacy and Robustness in Federated Learning: Attacks and Defenses".

Problem Addressed

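Traditional centralized machine learning cannot handle privacy concerns effectively, and federated learning (FL) has been developed in recent years as an alternative. FL, however, does not fundamentally solve the privacy problem, so this survey reviews the past five years of research on the attacks that FL faces and the corresponding defenses.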

Introduction

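Categories of FL by data partitioning

- Horizontally federated learning (HFL): participants share the same feature space but hold different samples

  - HFL to business (H2B): a small number of organizations as participants

  - HFL to consumers (H2C): potentially a very large number of consumer devices as participants

- Vertically federated learning (VFL): participants hold the same samples but different features

- Federated transfer learning (FTL): participants differ in both samples and features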
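The difference between HFL and VFL is easiest to see on a single table of users by features. The sketch below is illustrative only: the table, the party names and the helpers `horizontal_split` / `vertical_split` are hypothetical, and FTL (little overlap in either samples or features) is not shown.

```python
# Hypothetical toy table: one row per user (sample), one key per feature.
rows = [
    {"id": 1, "age": 34, "income": 52_000, "clicks": 12},
    {"id": 2, "age": 27, "income": 38_000, "clicks": 40},
    {"id": 3, "age": 45, "income": 91_000, "clicks": 3},
    {"id": 4, "age": 52, "income": 64_000, "clicks": 7},
]


def horizontal_split(table, n_parties):
    """HFL: each party holds the same features for a disjoint subset of samples."""
    return [table[i::n_parties] for i in range(n_parties)]


def vertical_split(table, column_groups):
    """VFL: each party holds every sample (aligned by "id") but only its own features."""
    return [
        [{"id": row["id"], **{col: row[col] for col in cols}} for row in table]
        for cols in column_groups
    ]


# Two parties with different users but identical features (HFL).
party_a, party_b = horizontal_split(rows, 2)
# A bank and a retailer with the same users but different features (VFL).
bank, retailer = vertical_split(rows, [["age", "income"], ["clicks"]])

print([r["id"] for r in party_a], [r["id"] for r in party_b])  # [1, 3] [2, 4]
print(sorted(retailer[0]))  # ['clicks', 'id']
```

In the VFL case the parties must first align their samples by a shared identifier, which is why every partition keeps the `"id"` key.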

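Categories of FL by model architecture

- FL with Homogeneous Architectures: all participants train the same model architecture

- FL with Heterogeneous Architectures: participants may use different model architectures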

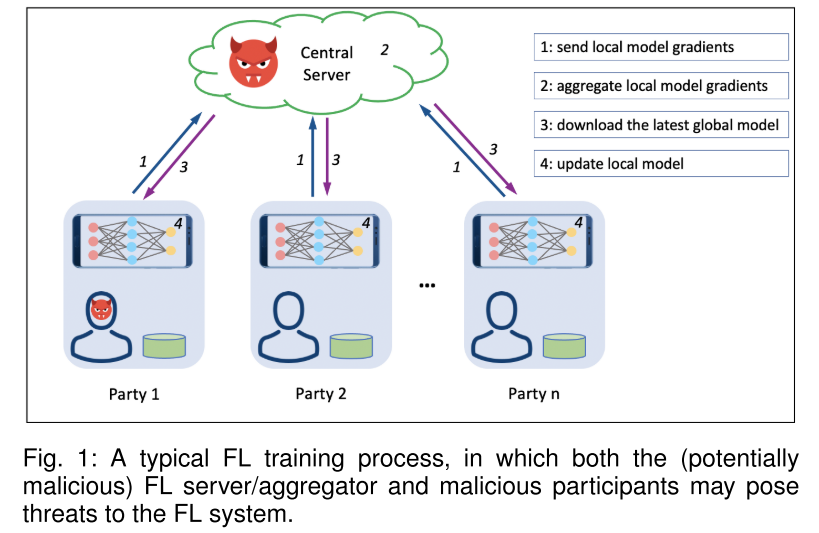

Threats to Federated Learning

The threats come mainly from two sources:

- malicious server

- adversarial participant

The main attacks are:

- data poisoning

- model poisoning

Threat Model

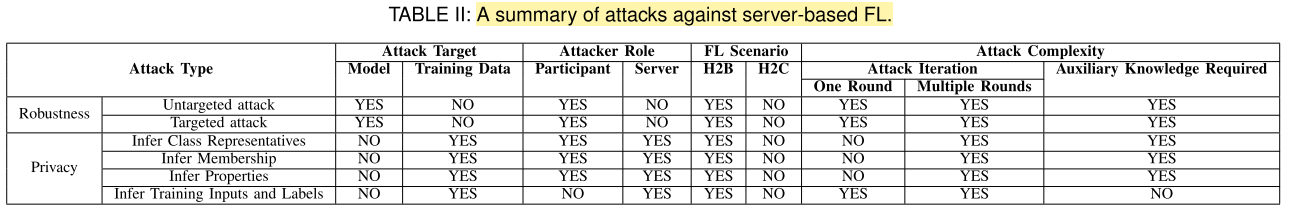

Attackers are classified along several dimensions:

- Insider vs Outsider

- Training phase vs Inference phase

- Privacy: semi-honest vs malicious

- Robustness: untargeted vs targeted

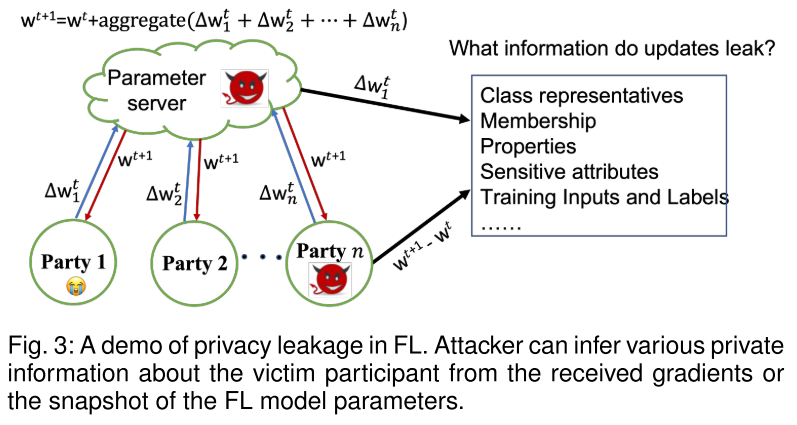

Privacy Attacks

Attacks against privacy include:

- Inferring Class Representatives

- Inferring Membership

- Inferring Properties

- Inferring Training Inputs and Labels
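Attacks that infer training inputs and labels work on the gradients or model updates a participant shares. As a minimal sketch, here is a simpler, closed-form special case rather than the optimization-based attacks the survey covers: for a single fully connected layer z = Wx + b trained with softmax cross-entropy on one sample, the shared gradients satisfy dL/dW = (dL/dz) x^T and dL/db = dL/dz. Dividing any row of dL/dW by the matching entry of dL/db therefore returns the private input x, and the only negative entry of dL/db reveals the label. The model sizes, values and function names are hypothetical; the batch-size-1 assumption is what makes the recovery exact.

```python
import math
import random


def forward_backward(W, b, x, y):
    """Client side: one linear layer z = Wx + b with softmax cross-entropy on a
    single sample (x, y). Returns the gradients the client would share."""
    z = [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]
    m = max(z)  # subtract the max for numerical stability
    exp_z = [math.exp(v - m) for v in z]
    total = sum(exp_z)
    probs = [e / total for e in exp_z]
    # dL/dz = softmax(z) - one_hot(y)
    dz = [p - (1.0 if i == y else 0.0) for i, p in enumerate(probs)]
    dW = [[dzi * xj for xj in x] for dzi in dz]  # outer product (dL/dz) x^T
    db = list(dz)                                # dL/db = dL/dz
    return dW, db


def reconstruct_input(dW, db):
    """Attacker side: row i of dW equals db[i] * x, so divide by db[i]
    (choosing the entry of largest magnitude to limit rounding error)."""
    i = max(range(len(db)), key=lambda k: abs(db[k]))
    return [g / db[i] for g in dW[i]]


def infer_label(db):
    """softmax - one_hot is negative only at the true class."""
    return min(range(len(db)), key=lambda k: db[k])


rng = random.Random(0)
n_in, n_out = 5, 3
W = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
b = [0.0] * n_out
x_private, y_private = [0.2, -1.3, 0.7, 2.0, 0.05], 1

dW, db = forward_backward(W, b, x_private, y_private)
x_rec = reconstruct_input(dW, db)
print([round(v, 6) for v in x_rec])  # matches x_private
print(infer_label(db))                # 1
```

With larger batches the per-sample gradients are averaged, so this division yields a weighted mixture of inputs rather than any single one; that is one reason practical attacks fall back on optimization.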

Defences against privacy attacks

- Homomorphic Encryption

- Secure Multiparty Computation (SMC)

- Differential Privacy
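Secure multiparty computation can hide individual updates from the server while still letting it compute their sum. A minimal sketch of one well-known building block, pairwise additive masking as used in secure aggregation protocols: every pair of clients shares a random mask that one adds and the other subtracts, so the masks cancel in the server's sum. This is an illustration under stated assumptions, not a complete protocol: masks are sampled directly instead of being derived from a key exchange, client dropouts are not handled, and `MOD`, `SCALE` and all function names are hypothetical choices.

```python
import random

MOD = 2**32        # all arithmetic is modulo MOD (assumed parameter)
SCALE = 10**6      # fixed-point scale for real-valued updates (assumed parameter)


def encode(value):
    """Map a real number to a fixed-point integer in [0, MOD)."""
    return round(value * SCALE) % MOD


def decode(value):
    """Inverse of encode: map [0, MOD) back to a signed value, then unscale."""
    if value >= MOD // 2:
        value -= MOD
    return value / SCALE


def pairwise_masks(n_clients, dim, rng):
    """One random mask vector per pair (i, j), i < j. In a real protocol the
    pair would derive it from a shared key; here it is simply sampled."""
    return {
        (i, j): [rng.randrange(MOD) for _ in range(dim)]
        for i in range(n_clients)
        for j in range(i + 1, n_clients)
    }


def masked_update(cid, update, masks, n_clients):
    """Client `cid` adds the masks it shares with higher-numbered clients and
    subtracts those shared with lower-numbered ones."""
    out = [encode(u) for u in update]
    for other in range(n_clients):
        if other == cid:
            continue
        mask = masks[(min(cid, other), max(cid, other))]
        sign = 1 if cid < other else -1
        out = [(o + sign * m) % MOD for o, m in zip(out, mask)]
    return out


def server_aggregate(messages):
    """The server only adds the messages; every mask appears once with + and
    once with -, so only the sum of the true updates remains."""
    total = [sum(col) % MOD for col in zip(*messages)]
    return [decode(t) for t in total]


rng = random.Random(42)
updates = [[0.5, -1.25, 2.0], [1.5, 0.25, -0.5], [-1.0, 1.0, 0.5]]
masks = pairwise_masks(len(updates), 3, rng)
messages = [masked_update(c, u, masks, len(updates)) for c, u in enumerate(updates)]
print(server_aggregate(messages))  # [1.0, 0.0, 2.0]
```

The server learns only the sum; each individual message looks uniformly random. Because real-valued updates are encoded as fixed-point integers modulo `MOD`, the aggregated values must stay within about ±MOD / (2 * SCALE).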

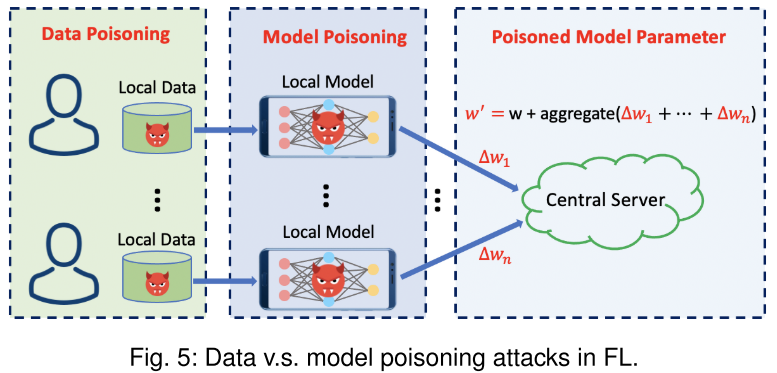
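For the differential privacy defense listed above, a common pattern in FL (in the spirit of DP-FedAvg) is to bound each client's influence by clipping its update to an L2 norm `clip_norm`, averaging, and adding Gaussian noise scaled to that bound. The sketch below shows only this mechanism; translating `noise_multiplier` into an (ε, δ) guarantee requires a privacy accountant, which is omitted, and the function names and parameter values are hypothetical.

```python
import math
import random


def clip_update(update, clip_norm):
    """Scale an update down so that its L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(u * u for u in update))
    factor = min(1.0, clip_norm / norm) if norm > 0 else 1.0
    return [u * factor for u in update]


def dp_average(updates, clip_norm, noise_multiplier, rng):
    """Clip every client update, average them, and add Gaussian noise.

    After clipping, adding or removing one client's update changes the sum by
    at most clip_norm (the average by clip_norm / n for a fixed n), so the
    noise std is scaled to that bound.
    """
    n = len(updates)
    clipped = [clip_update(u, clip_norm) for u in updates]
    mean = [sum(col) / n for col in zip(*clipped)]
    sigma = noise_multiplier * clip_norm / n
    return [m + rng.gauss(0.0, sigma) for m in mean]


rng = random.Random(0)
updates = [[3.0, 4.0], [0.3, 0.4], [-6.0, 8.0]]  # hypothetical client updates
noisy_mean = dp_average(updates, clip_norm=1.0, noise_multiplier=1.1, rng=rng)
print(noisy_mean)
```

A larger `noise_multiplier` gives a stronger privacy guarantee at the cost of a noisier aggregate, and a smaller `clip_norm` reduces the noise needed but distorts large honest updates.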

Poisoning attacks

Poisoning attacks include:

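- Untargeted: no specific target; aims to degrade the model's overall accuracy and performance

- Targeted: aims at a specific goal chosen by the attacker, e.g., backdoor attacks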
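The two kinds of poisoning differ in what the attacker wants from the final model. A minimal data-poisoning sketch of each, applied by a malicious participant to its own local data before training: random label flipping for the untargeted case, and a trigger-plus-target-label backdoor for the targeted case. The dataset format (a list of `(features, label)` pairs), the trigger pattern and the poisoning rates are hypothetical; model poisoning, where the attacker manipulates the update itself, is not shown.

```python
import random


def label_flip(dataset, n_classes, rate, rng):
    """Untargeted: give a fraction of samples a random wrong label, which only
    aims to lower the model's overall accuracy."""
    poisoned = []
    for x, y in dataset:
        if rng.random() < rate:
            y = rng.choice([c for c in range(n_classes) if c != y])
        poisoned.append((x, y))
    return poisoned


def backdoor(dataset, trigger, target_label, rate, rng):
    """Targeted (backdoor): stamp a trigger onto a fraction of inputs and relabel
    them as target_label, aiming for a model that maps 'trigger -> target_label'
    while behaving normally on clean inputs."""
    poisoned = []
    for x, y in dataset:
        if rng.random() < rate:
            x = x[: len(x) - len(trigger)] + trigger  # overwrite the last features
            y = target_label
        poisoned.append((x, y))
    return poisoned


rng = random.Random(7)
# Hypothetical local dataset of a malicious participant: (features, label) pairs.
local_data = [([i / 10] * 4, i % 3) for i in range(10)]
flipped = label_flip(local_data, n_classes=3, rate=0.3, rng=rng)
backdoored = backdoor(local_data, trigger=[9.0, 9.0], target_label=0, rate=0.3, rng=rng)
```

Label flipping leaves the inputs untouched and only corrupts labels, whereas the backdoor changes both, which is why it can stay stealthy on clean test accuracy.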

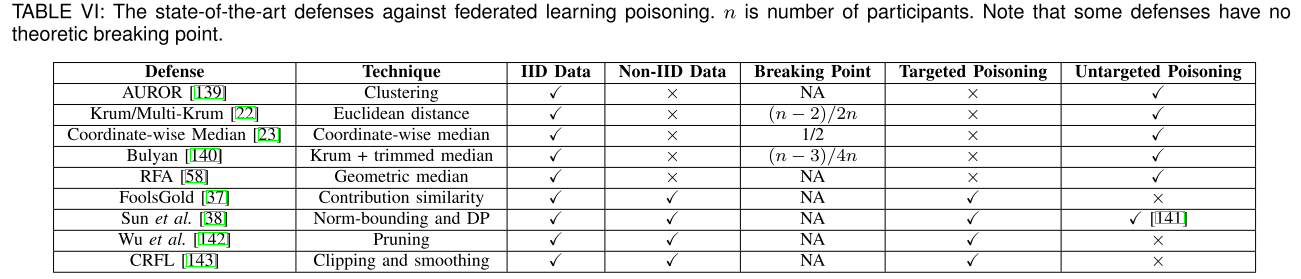

Defences against poisoning attacks
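One widely studied family of defenses against poisoning is Byzantine-robust aggregation, in which the server replaces the plain (FedAvg-style) mean of client updates with a statistic that a few outliers cannot drag arbitrarily far, such as the coordinate-wise median or the trimmed mean. A minimal sketch, assuming updates are equal-length lists of floats and that only a minority of clients are malicious; the update values and function names are hypothetical.

```python
from statistics import median


def mean_aggregate(updates):
    """Plain coordinate-wise mean, as in FedAvg with equal client weights."""
    return [sum(col) / len(col) for col in zip(*updates)]


def median_aggregate(updates):
    """Coordinate-wise median: each coordinate is aggregated independently."""
    return [median(col) for col in zip(*updates)]


def trimmed_mean_aggregate(updates, k):
    """Coordinate-wise trimmed mean: drop the k largest and k smallest values
    of every coordinate, then average the rest (requires 2k < n)."""
    result = []
    for col in zip(*updates):
        kept = sorted(col)[k : len(col) - k]
        result.append(sum(kept) / len(kept))
    return result


honest = [[1.0, -0.5], [1.2, -0.4], [0.9, -0.6], [1.1, -0.5]]
malicious = [[-100.0, 100.0]]  # hypothetical scaled-up poisoned update
updates = honest + malicious

print(mean_aggregate(updates))                # pulled far from the honest updates
print(median_aggregate(updates))              # [1.0, -0.5]
print(trimmed_mean_aggregate(updates, k=1))   # close to the honest updates
```

A single scaled update pulls the plain mean far from the honest updates, while the median and trimmed mean stay close to them. These statistics are not a complete defense: they can discard useful signal under non-IID data, and carefully crafted updates that stay within the honest range can still get through.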

Discussion and Promising Directions

- Curse of Dimensionality

- Rethinking Current Privacy Attacks

- Rethinking Current Defences

- Optimizing Defence Mechanism Deployment

- Test-phase Privacy in FL

- Test-phase Robustness in FL

- Relationship with GDPR

- Threats and Protection of VFL and FTL

- Vulnerabilities to Free-riding Participants

- More Possibilities in FL with Heterogeneous Architectures

- Decentralized FL

- Efficient FL with Single Round Communication

All articles in this blog are licensed under CC BY-NC-SA 4.0 unless otherwise stated.